Monitor Security and Best Practices with Kyverno and Policy Reporter

Foreword

This article, like my previous article about Falco and Falcosidekick, is a practical guide to installing, configuring, and using Kyverno, with additional monitoring provided by Policy Reporter.

Background

As I described in my last article, there is no single tool that makes a Kubernetes cluster secure. There are different levels to consider, each with its own mechanisms to make it more secure. While Falco increases runtime security through its rule engine and alerts, some best practices should already be taken into account when Kubernetes manifests are created.

In order to observe, monitor, and, if possible, automate these best practices from the start in my company's internal Kubernetes cluster, we looked for a suitable solution.

Requirement analysis

We built this cluster as an internal playground because most of the developers had little to no previous experience with Kubernetes. Therefore, the solution should be as Kubernetes-native as possible to minimize the additional learning effort. The main focus is on defining and monitoring best practices and security standards as rules; additional features are optional but not required. Example rules would be ensuring that Pods define resource requests and limits, and that production-like environments use NetworkPolicies to control which Pods can communicate with each other.

Possibilities

At the time of writing, there is one deprecated native resource and two established open-source solutions worth mentioning.

- Pod Security Policies are defined as a cluster-level resource that controls security-sensitive aspects of the pod specification. This resource is available in every Kubernetes cluster, but it is deprecated and will be removed in the near future. The core idea is to validate pods against a fixed set of rules to improve the security of your cluster. The drawbacks of this solution are that the rules are fixed and not extensible, only pods can be validated, and the configuration is tied to the user or resource that created the pod.

- Open Policy Agent Gatekeeper is described as a validating (mutating TBA) webhook that enforces CRD-based policies executed by Open Policy Agent, a policy engine for cloud-native environments hosted by the CNCF. OPA Gatekeeper is a very popular solution and uses Rego as its policy language. It can enforce and audit policies and mutate resources.

- Kyverno is described as a policy engine designed for Kubernetes. Kyverno policies can validate, mutate, and generate Kubernetes resources. The Kyverno CLI can be used to test policies and validate resources as part of a CI/CD pipeline. As with OPA, validation policies can run in audit or enforce mode. Kyverno uses only YAML and CRDs to define policies for a single namespace or the entire cluster.

Decisions

Pod Security Policies are deprecated and not easy to configure. They have a fixed set of rules and are not extensible, but the rules themselves are still valid and valuable. Fortunately, both OPA Gatekeeper and Kyverno offer the Pod Security Policies as ready-to-use policies for their respective engines, which makes both a good replacement for PSP. Unlike PSP, both solutions support all types of resources, not only Pods.

OPA Gatekeeper can validate resources in audit or enforce mode and mutate them, based on policies written in Rego, which offers all the capabilities of a programming language instead of static configuration. Kyverno can also validate resources in audit or enforce mode, and it can mutate and generate resources, based on policies defined in pure YAML. It offers features such as ConfigMap values, JMESPath, and Kubernetes apiCalls to support dynamic policies based on the current state of your cluster.

OPA Gatekeeper supports high availability, provides metrics, and can be used outside of Kubernetes. Kyverno has an open proposal to support metrics soon and plans HA support for version 1.4.0, but it is limited to the Kubernetes ecosystem.

In making our decision, we focused mainly on simplicity and on support for validation and monitoring. We chose Kyverno for one important reason: defining policies as simple YAML-based manifests is much easier for us. It supports all the features we need to fulfill our requirements, and more. We can define validation policies in audit or enforce mode and automate behavior with mutate and generate policies.

Because our Kubernetes cluster is only intended as a playground, high availability does not currently play a major role.

In audit mode, Kyverno creates PolicyReport and ClusterPolicyReport custom resources to provide the validation results. These reports are hard to monitor in terms of their status, and Kyverno does not yet provide metrics about validation results. This is where Policy Reporter comes in, which I will discuss in more detail later.

Environment

- Multi-node cluster hosted on Hetzner Cloud

- Ubuntu 20.04 operating system

- RKE cluster managed with Rancher v2.5.7 and Kubernetes v1.20.2

Getting Started with Kyverno

Installing Kyverno

Kyverno can be installed with Helm or with static manifests using kubectl. Because I want to benefit from additional features such as the PSP-based default policies in audit mode, and to tweak the configuration for my environment, I use the provided Helm chart.

Adding the Helm repository:

helm repo add kyverno https://kyverno.github.io/kyverno/

helm repo update

I want to tweak the values.yaml for Kyverno because:

- I am using RKE and have a few more namespaces that Kyverno should ignore.

- I want to generate nonstandard CRDs with Kyverno

# values.yaml
# this snippet includes only the changeset

# With the value "default", this configuration installs 10 PSP-based policies in audit mode.
# This improves your cluster security without additional effort.
#
# Supported: default/restricted
# For more info: https://kyverno.io/policies/pod-security
podSecurityStandard: default

# resources or namespaces that Kyverno should not validate: https://kyverno.io/docs/installation/#resource-filters
config:
  resourceFilters:
  - "[Event,*,*]"
  - "[*,kube-system,*]"
  - "[*,kube-public,*]"
  - "[*,kube-node-lease,*]"
  - "[Node,*,*]"
  - "[APIService,*,*]"
  - "[TokenReview,*,*]"
  - "[SubjectAccessReview,*,*]"
  - "[*,kyverno,*]"
  - "[Binding,*,*]"
  - "[ReplicaSet,*,*]"
  - "[ReportChangeRequest,*,*]"
  - "[ClusterReportChangeRequest,*,*]"
  # additional RKE / Rancher specific namespaces
  - "[*,cattle-system,*]"
  - "[*,cattle-monitoring-system,*]"
  - "[*,fleet-system,*]"
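To make the `[Kind,Namespace,Name]` filter format more concrete, here is a minimal Python sketch of how such filters could be evaluated. It assumes that `*` acts as a shell-style wildcard in each of the three positions and that cluster-scoped resources have an empty namespace; `is_filtered` and `parse_filter` are hypothetical helpers for illustration, not Kyverno's implementation.

```python
from fnmatch import fnmatchcase

# A subset of the resourceFilters from the values.yaml above.
RESOURCE_FILTERS = [
    "[Event,*,*]",
    "[*,kube-system,*]",
    "[*,kyverno,*]",
    "[Node,*,*]",
    "[ReplicaSet,*,*]",
    "[*,cattle-system,*]",
]


def parse_filter(entry):
    """Split "[Kind,Namespace,Name]" into a (kind, namespace, name) tuple."""
    kind, namespace, name = entry.strip("[]").split(",")
    return kind, namespace, name


def is_filtered(kind, namespace, name, filters=RESOURCE_FILTERS):
    """Return True if any filter matches, i.e. the resource would be ignored.

    Assumption: "*" behaves like a shell-style wildcard in every position,
    and cluster-scoped resources have an empty namespace.
    """
    for entry in filters:
        f_kind, f_namespace, f_name = parse_filter(entry)
        if (
            fnmatchcase(kind, f_kind)
            and fnmatchcase(namespace, f_namespace)
            and fnmatchcase(name, f_name)
        ):
            return True
    return False


if __name__ == "__main__":
    print(is_filtered("Pod", "kube-system", "coredns"))  # True: namespace filter
    print(is_filtered("Pod", "test-kyverno", "nginx"))   # False: gets validated
    print(is_filtered("Node", "", "worker-1"))           # True: kind filter
```

A single matching entry is enough to skip a resource, which is why one namespace-wide entry such as `[*,cattle-system,*]` excludes every resource kind in that namespace.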

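With these values, I install Kyverno into the kyverno namespace, which is created automatically. Note that the values file has to be passed with -f, otherwise the chart defaults are used:

helm install kyverno kyverno/kyverno --namespace kyverno --create-namespace -f values.yaml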

After a few minutes you should see the Kyverno Pod up and running.

kubectl get pod -n kyverno

NAME                      READY   STATUS    RESTARTS   AGE
kyverno-6b456946c-4927d   1/1     Running   0          96s

You should also see the default Pod Security policies that Kyverno created in audit mode. The BACKGROUND column shows the background setting of each validation policy: it specifies whether the policy also audits already existing resources (value true) or only newly created resources (value false). This setting also applies to policies in enforce mode.

# cpol is the short name for clusterpolicies
kubectl get cpol

NAME                             BACKGROUND   ACTION
disallow-add-capabilities        true         audit
disallow-host-namespaces         true         audit
disallow-host-path               true         audit
disallow-host-ports              true         audit
disallow-privileged-containers   true         audit
disallow-selinux                 true         audit
require-default-proc-mount       true         audit
restrict-apparmor-profiles       true         audit
restrict-sysctls                 true         audit

Example Policy

Let us look at the manifest of one of those policies.

kubectl get cpol disallow-host-path -o yaml

# I removed unnecessary information like managed fields
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  annotations:
    meta.helm.sh/release-name: kyverno
    meta.helm.sh/release-namespace: kyverno
    policies.kyverno.io/category: Pod Security Standards (Default)
    policies.kyverno.io/description: HostPath volumes let pods use host directories
      and volumes in containers. Using host resources can be used to access shared
      data or escalate privileges and should not be allowed.
  labels:
    app.kubernetes.io/managed-by: Helm
  name: disallow-host-path
spec:
  background: true
  rules:
  - match:
      resources:
        kinds:
        - Pod
    name: host-path
    validate:
      message: HostPath volumes are forbidden. The fields spec.volumes[*].hostPath
        must not be set.
      pattern:
        spec:
          =(volumes):
          - X(hostPath): "null"
  validationFailureAction: audit

As you can see, each policy can define multiple rules. A rule has a match section that selects the resources to validate; this policy validates all resources of kind Pod. Other common filters are selector for label selection, namespaceSelector to filter resources in selected namespaces, and the resource name, which supports wildcards. For detailed information, have a look at the Kyverno documentation.

Each rule has a unique name within its policy. The validate section is where the magic happens. For a policy in enforce mode, kubectl returns the message when your resource fails validation. In audit mode, the message is shown in the generated PolicyReportResult for each validated resource.

A pattern defines what a resource must satisfy to pass validation; its structure and the available fields follow the validated resource. In this example, only Pods with defined volumes are checked: the conditional anchor =(volumes) applies the nested check only if the Pod defines volumes, and the negation anchor X(hostPath) requires that no volume uses hostPath, regardless of its value. For more information about validation operations, see the Kyverno documentation.
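The two anchors can be modeled in a few lines of Python. This is a simplified sketch of the semantics of this one pattern, operating on a Pod represented as a plain dict; `passes_disallow_host_path` is a hypothetical name, and Kyverno's real pattern engine is far more general.

```python
def passes_disallow_host_path(pod):
    """Simplified model of the pattern

        spec:
          =(volumes):
          - X(hostPath): "null"
    """
    spec = pod.get("spec", {})
    # =(volumes): conditional anchor, the nested check only runs if volumes exist
    if "volumes" not in spec:
        return True
    # X(hostPath): negation anchor, no volume may define the hostPath key at all
    return all("hostPath" not in volume for volume in spec["volumes"])


if __name__ == "__main__":
    no_volumes = {"spec": {"containers": [{"name": "nginx", "image": "nginx:alpine"}]}}
    empty_dir = {"spec": {"volumes": [{"name": "cache", "emptyDir": {}}]}}
    host_path = {"spec": {"volumes": [{"name": "etc", "hostPath": {"path": "/etc"}}]}}
    print(passes_disallow_host_path(no_volumes))  # True
    print(passes_disallow_host_path(empty_dir))   # True
    print(passes_disallow_host_path(host_path))   # False
```

Because the single list entry in the pattern is applied to every volume, one hostPath volume is enough to fail the whole Pod.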

For more examples, have a look at the Best Practices policies from Kyverno.

Kyverno CRDs also fully support kubectl explain.

kubectl explain ClusterPolicy.spec.rules.match

KIND:     ClusterPolicy
VERSION:  kyverno.io/v1

RESOURCE: match <Object>

DESCRIPTION:
     MatchResources defines when this policy rule should be applied. The match
     criteria can include resource information (e.g. kind, name, namespace,
     labels) and admission review request information like the user name or
     role. At least one kind is required.

FIELDS:
   clusterRoles   <[]string>
     ClusterRoles is the list of cluster-wide role names for the user.

   resources      <Object>
     ResourceDescription contains information about the resource being created
     or modified. Requires at least one tag to be specified when under
     MatchResources.

   roles          <[]string>
     Roles is the list of namespaced role names for the user.

   subjects       <[]Object>
     Subjects is the list of subject names like users, user groups, and service
     accounts.

Define custom validation Policies

The goal is to define our requirements and best practices as validation policies. We can choose between a Policy and a ClusterPolicy. The only difference is that a Policy belongs to a single namespace, while a ClusterPolicy applies to all namespaces. In most cases, you use a ClusterPolicy combined with resource filters and namespace selectors to validate only the resources in a subset of your cluster's namespaces.

Check Resource Requests and Limits are configured

The aim of this policy is to check whether Pods outside our resource filters have resource requests and limits configured. We want to audit this configuration rather than enforce it: enforced rules are useful, but they can hurt productivity during development, especially when simple applications are being tested and tools evaluated. In addition, early in development the required resources may be unclear, which leads to values that are unnecessarily high or too low.

By monitoring for this missing configuration, I know where to add resource constraints if needed.

apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: check-resource-requests-and-limits-configured
  annotations:
    policies.kyverno.io/category: Best Practices
spec:
  rules:
  - match:
      # select all Pod resources
      resources:
        kinds:
        - Pod
    name: check-for-resource-requirements
    validate:
      # message shown for failed validations
      message: resource requests and limits should be configured
      pattern:
        spec:
          containers:
          # check if the container spec has resources configured
          - resources:
              limits:
                # check if spec.containers[*].resources.limits.cpu is configured with any non-empty value
                cpu: "?*"
                memory: "?*"
              requests:
                cpu: "?*"
                memory: "?*"
  # validation mode
  validationFailureAction: audit
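The "?*" wildcard combines "?" (exactly one character) and "*" (any number of characters), so it accepts any non-empty value. The following Python sketch models what this pattern checks for a Pod given as a plain dict; the helper names are hypothetical, and the example values are the ones used with kubectl run later in this article.

```python
from fnmatch import fnmatchcase

# Fields the pattern requires for every container (spec.containers[*].resources)
REQUIRED_FIELDS = {"limits": ("cpu", "memory"), "requests": ("cpu", "memory")}


def container_has_resources(container):
    resources = container.get("resources", {})
    for section, keys in REQUIRED_FIELDS.items():
        values = resources.get(section, {})
        for key in keys:
            # "?*" needs at least one character: "?" matches exactly one,
            # "*" matches any number, so missing or empty values fail
            if key not in values or not fnmatchcase(str(values[key]), "?*"):
                return False
    return True


def pod_has_resources(pod):
    # the single-entry list in the pattern is applied to every container
    return all(container_has_resources(c) for c in pod["spec"]["containers"])


if __name__ == "__main__":
    plain = {"spec": {"containers": [{"name": "nginx", "image": "nginx:alpine"}]}}
    constrained = {"spec": {"containers": [{
        "name": "nginx",
        "image": "nginx:alpine",
        "resources": {
            "requests": {"cpu": "1m", "memory": "10M"},
            "limits": {"cpu": "5m", "memory": "15M"},
        },
    }]}}
    print(pod_has_resources(plain))        # False
    print(pod_has_resources(constrained))  # True
```

The check only asks whether a value is present; it does not judge whether the values are sensible, which fits the audit-first approach described above.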

You may wonder whether this also works for Pods created by other resources such as Deployments, Jobs, or StatefulSets. If your policy validates Pods, Kyverno automatically extends it with additional rules for these kinds of resources. You can verify this behavior by checking your newly created policy:

kubectl get clusterpolicy.kyverno.io/check-resource-requests-and-limits-configured -o yaml

# this snippet shows only meta information and
# a single autogenerated rule as an example
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  annotations:
    pod-policies.kyverno.io/autogen-controllers: DaemonSet,Deployment,Job,StatefulSet,CronJob
    policies.kyverno.io/category: Best Practices
    policies.kyverno.io/severity: high
spec:
  background: true
  rules:
  ...
  - match:
      resources:
        kinds:
        - DaemonSet
        - Deployment
        - Job
        - StatefulSet
    name: autogen-check-for-resource-requirements
    validate:
      message: resource requests and limits should be configured
      pattern:
        spec:
          template:
            spec:
              containers:
              - resources:
                  limits:
                    cpu: ?*
                    memory: ?*
                  requests:
                    cpu: ?*
                    memory: ?*
  ...

The important part of this snippet is the automatically created annotation pod-policies.kyverno.io/autogen-controllers with the value DaemonSet,Deployment,Job,StatefulSet,CronJob. By setting this annotation yourself, you can change this behavior; to disable it, use none as the value. See the Kyverno documentation for details.
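The transformation visible in the output above, where the Pod pattern reappears under spec.template for the controller kinds, can be sketched in Python as follows. This is an illustration of the idea only, not Kyverno's autogen code; `autogen_rule` is a hypothetical helper, and CronJob is deliberately left out because its Pod template sits one level deeper.

```python
import copy

# Controllers listed in the autogen-controllers annotation shown above
# (CronJob is left out here because it needs one more level of nesting).
POD_CONTROLLERS = ["DaemonSet", "Deployment", "Job", "StatefulSet"]


def autogen_rule(pod_rule):
    """Derive a controller rule from a Pod rule by nesting its pattern
    under spec.template, mirroring the autogen rule in the output above."""
    rule = copy.deepcopy(pod_rule)  # keep the original Pod rule untouched
    rule["name"] = "autogen-" + pod_rule["name"]
    rule["match"]["resources"]["kinds"] = list(POD_CONTROLLERS)
    # {"spec": {...}} for a Pod becomes {"spec": {"template": {"spec": {...}}}}
    rule["validate"]["pattern"] = {"spec": {"template": rule["validate"]["pattern"]}}
    return rule


if __name__ == "__main__":
    pod_rule = {
        "name": "check-for-resource-requirements",
        "match": {"resources": {"kinds": ["Pod"]}},
        "validate": {
            "message": "resource requests and limits should be configured",
            "pattern": {"spec": {"containers": [{"resources": {"limits": {"cpu": "?*"}}}]}},
        },
    }
    generated = autogen_rule(pod_rule)
    print(generated["name"])  # autogen-check-for-resource-requirements
    print(generated["validate"]["pattern"]["spec"]["template"]["spec"]["containers"][0])
```

Since a controller's spec.template is itself a Pod template, wrapping the unchanged Pod pattern is all it takes to reuse the rule.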

Check validation Results

To check validation results, we need some workload to validate. So, I create a test-kyverno namespace and run nginx as a sample workload.

kubectl create ns test-kyverno

kubectl run nginx --image nginx:alpine -n test-kyverno

For each namespace that is not excluded by the resource filters, Kyverno creates a PolicyReport. This resource contains the validation results of every executed rule for every validated resource in that namespace. You can get a summary as follows:

kubectl get polr -n test-kyverno

NAME                   PASS   FAIL   WARN   ERROR   SKIP   AGE
polr-ns-test-kyverno   9      1      0      0       0      1m

As you can see, there are 9 passed and 1 failed validation results, but the summary shows neither how many resources were validated nor which validation failed. To get more details, we can describe the report and filter for the status fail.
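The summary columns are simply counts of the individual results grouped by status. The following Python sketch shows the idea on a simplified result shape (dicts with policy, rule, resource, and status keys), which is an assumption and not the full PolicyReport schema. The nine passing entries are placeholders chosen only to reproduce the numbers above.

```python
from collections import Counter

STATUSES = ("pass", "fail", "warn", "error", "skip")


def summarize(results):
    """Count results per status, like the PASS/FAIL/WARN/ERROR/SKIP columns."""
    counts = Counter(result["status"] for result in results)
    return {status: counts[status] for status in STATUSES}


def failed(results):
    """List (policy, rule, resource) for every failed result."""
    return [
        (r["policy"], r["rule"], r["resource"])
        for r in results
        if r["status"] == "fail"
    ]


if __name__ == "__main__":
    # placeholder passing results, only to reproduce the 9/1 summary above
    results = [
        {"policy": f"placeholder-policy-{i}", "rule": "placeholder-rule",
         "resource": "nginx", "status": "pass"}
        for i in range(9)
    ]
    results.append({"policy": "check-resource-requests-and-limits-configured",
                    "rule": "check-for-resource-requirements",
                    "resource": "nginx", "status": "fail"})
    print(summarize(results))  # {'pass': 9, 'fail': 1, 'warn': 0, 'error': 0, 'skip': 0}
    print(failed(results))
```

The summary alone loses the link between a failure and its policy, rule, and resource, which is exactly the information the describe command below recovers.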

At the time of writing, Kyverno PolicyReportResults can only have the status pass or fail.

kubectl describe polr -n test-kyverno | grep -i "status: \+fail" -B10

Message:  validation error: resource requests and limits should be configured. Rule check-for-resource-requirements failed at path /spec/containers/0/resources/requests/
Policy:   check-resource-requests-and-limits-configured
Resources:
  API Version:  v1
  Kind:         Pod
  Name:         nginx
  Namespace:    test-kyverno
  UID:          28508c80-73d6-4836-9895-e1fc80069c90
Rule:     check-for-resource-requirements
Scored:   true
Status:   fail

Now we see that our nginx Pod fails resource validation because a basic kubectl run does not configure resource constraints by default. We need to improve our Pod declaration to fulfill our custom policy.

kubectl delete po nginx -n test-kyverno

kubectl run nginx --image nginx:alpine --requests 'cpu=1m,memory=10M' --limits='cpu=5m,memory=15M' -n test-kyverno

Let us check again.

kubectl get polr -n test-kyverno

NAME                   PASS   FAIL   WARN   ERROR   SKIP   AGE
polr-ns-test-kyverno   10     0      0      0       0      5m

Now the nginx Pod passes all validations, but as you may have noticed, checking the validation status of individual rules is neither easy nor convenient. An additional problem arises when you have multiple namespaces and want to know how many validation failures occur across the cluster: you need to check the PolicyReport of each namespace that your policies affect.
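The cluster-wide view that is missing here is essentially an aggregation over all namespaced reports. The following Python sketch assumes the results of each PolicyReport have already been collected into a dict keyed by namespace; the data shape, the helper names, and the second namespace team-a are hypothetical.

```python
from collections import Counter


def failures_by_namespace(reports):
    """reports maps a namespace to the results of its PolicyReport."""
    return {
        namespace: sum(1 for r in results if r["status"] == "fail")
        for namespace, results in reports.items()
    }


def failures_by_policy(reports):
    """Count failed results per policy across all namespaces."""
    counts = Counter()
    for results in reports.values():
        counts.update(r["policy"] for r in results if r["status"] == "fail")
    return dict(counts)


if __name__ == "__main__":
    reports = {
        "test-kyverno": [
            {"policy": "check-resource-requests-and-limits-configured", "status": "fail"},
            {"policy": "disallow-host-path", "status": "pass"},
        ],
        # "team-a" is a hypothetical second namespace
        "team-a": [
            {"policy": "check-resource-requests-and-limits-configured", "status": "fail"},
            {"policy": "disallow-host-path", "status": "fail"},
        ],
    }
    print(failures_by_namespace(reports))  # {'test-kyverno': 1, 'team-a': 2}
    print(failures_by_policy(reports))
```

Doing this by hand with kubectl means one query per namespace, which is the gap the following tool is meant to close.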

To solve this problem, I created a tool called Policy Reporter.

Monitor validation Results with Policy Reporter

The story behind Policy Reporter

As I described in the requirement analysis, our goal was to define our best practices as policies, but also to monitor them. As this was not so easy with the given tools, I started to build my own solution for this problem. I am a contributor to Falcosidekick, which inspired me to build something similar for the Kyverno ecosystem.

To be able to monitor the validation results, the first task was to provide Prometheus metrics for common monitoring tools such as Grafana. Also included is an optional integration for Prometheus Operator and three configurable Grafana dashboards.
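To give an idea of what such metrics look like, the following Python sketch renders validation results in the Prometheus text exposition format (HELP and TYPE lines followed by labeled samples). The metric name policy_results and its label set are hypothetical choices for illustration; the metrics Policy Reporter actually exposes may be named and labeled differently.

```python
from collections import Counter

METRIC = "policy_results"  # hypothetical metric name, not Policy Reporter's actual one


def render_metrics(results):
    """Render one gauge sample per (namespace, policy, rule, status)
    in the Prometheus text exposition format."""
    counts = Counter(
        (r["namespace"], r["policy"], r["rule"], r["status"]) for r in results
    )
    lines = [
        f"# HELP {METRIC} Number of policy validation results.",
        f"# TYPE {METRIC} gauge",
    ]
    for (namespace, policy, rule, status), value in sorted(counts.items()):
        # label values are not escaped here; a real exporter must escape
        # backslashes, double quotes and newlines
        lines.append(
            f'{METRIC}{{namespace="{namespace}",policy="{policy}",'
            f'rule="{rule}",status="{status}"}} {value}'
        )
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    results = [
        {"namespace": "test-kyverno",
         "policy": "check-resource-requests-and-limits-configured",
         "rule": "check-for-resource-requirements", "status": "fail"},
        {"namespace": "test-kyverno", "policy": "disallow-host-path",
         "rule": "host-path", "status": "pass"},
    ]
    print(render_metrics(results), end="")
```

Labeling each sample with namespace, policy, rule, and status lets Grafana filter and sum across any of these dimensions, which is what makes cluster-wide dashboards possible.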

The second part was to extend the monitoring capabilities by sending notifications to additional tools when new validation failures are detected. Since Policy Reporter already supported Grafana with metrics, I decided to start supporting Grafana Loki as a log aggregator solution. In the current version, in addition to Loki, it also supports Elasticsearch, Microsoft Teams, Discord and Slack.
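The key idea behind notifying only on new validation failures is to compare the current results with the previously known ones. The following Python sketch shows that diff under the assumption that a result is identified by policy, rule, namespace, and resource name; this identity key and the helper names are hypothetical and not taken from Policy Reporter's code.

```python
def result_key(result):
    # Hypothetical identity of a result: policy, rule and the validated resource
    return (result["policy"], result["rule"], result["namespace"], result["resource"])


def new_failures(previous, current):
    """Return failed results in `current` that were not already failing in `previous`."""
    already_failing = {result_key(r) for r in previous if r["status"] == "fail"}
    return [
        r for r in current
        if r["status"] == "fail" and result_key(r) not in already_failing
    ]


if __name__ == "__main__":
    base = {"policy": "check-resource-requests-and-limits-configured",
            "rule": "check-for-resource-requirements", "namespace": "test-kyverno"}
    previous = [{**base, "resource": "nginx", "status": "fail"}]
    current = previous + [{**base, "resource": "nginx-invalid", "status": "fail"}]
    for result in new_failures(previous, current):
        print("notify:", result["resource"])  # notify: nginx-invalid
```

Without such a diff, every re-evaluation of an unchanged report would resend the same failures to Slack, Discord, or the other targets.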

After the basic functionality was given, I realized that not everyone has a running monitoring solution in their cluster. Also, there were users who just wanted to try out or demonstrate Kyverno once, and therefore did not want to put any additional effort into monitoring. For this reason, in addition to Policy Reporter, I developed the Policy Reporter UI. It is installed as an additional app and provides a standalone interface with charts, tables and filters for a flexible overview of all available validation results.

As a note, the PolicyReport CRD is a prototype for a new Kubernetes standard. In the future, other tools such as kube-bench may also produce PolicyReports and would thus also be supported by Policy Reporter.

Installing Policy Reporter

Policy Reporter provides a Helm Chart to install it together with the Policy Reporter UI.

Adding the Policy Reporter repository:

helm repo add policy-reporter https://fjogeleit.github.io/policy-reporter

helm repo update

Installation together with the optional Policy Reporter UI:

helm install policy-reporter policy-reporter/policy-reporter --set ui.enabled=true -n policy-reporter --create-namespace

After a few seconds there should be 2 Pods up and running.

kubectl get pods -n policy-reporter

NAME                                 READY   STATUS    RESTARTS   AGE
policy-reporter-747c456dcb-j5j7f     1/1     Running   0          15s
policy-reporter-ui-ddd888675-rv9s8   1/1     Running   0          15s

Using Policy Reporter UI

To see at least one failed validation, we create another workload that violates our resource validation.

kubectl run nginx-invalid --image nginx:alpine -n test-kyverno

Now we can port-forward the UI to localhost to be able to use it.

kubectl port-forward service/policy-reporter-ui 8082:8080 -n policy-reporter

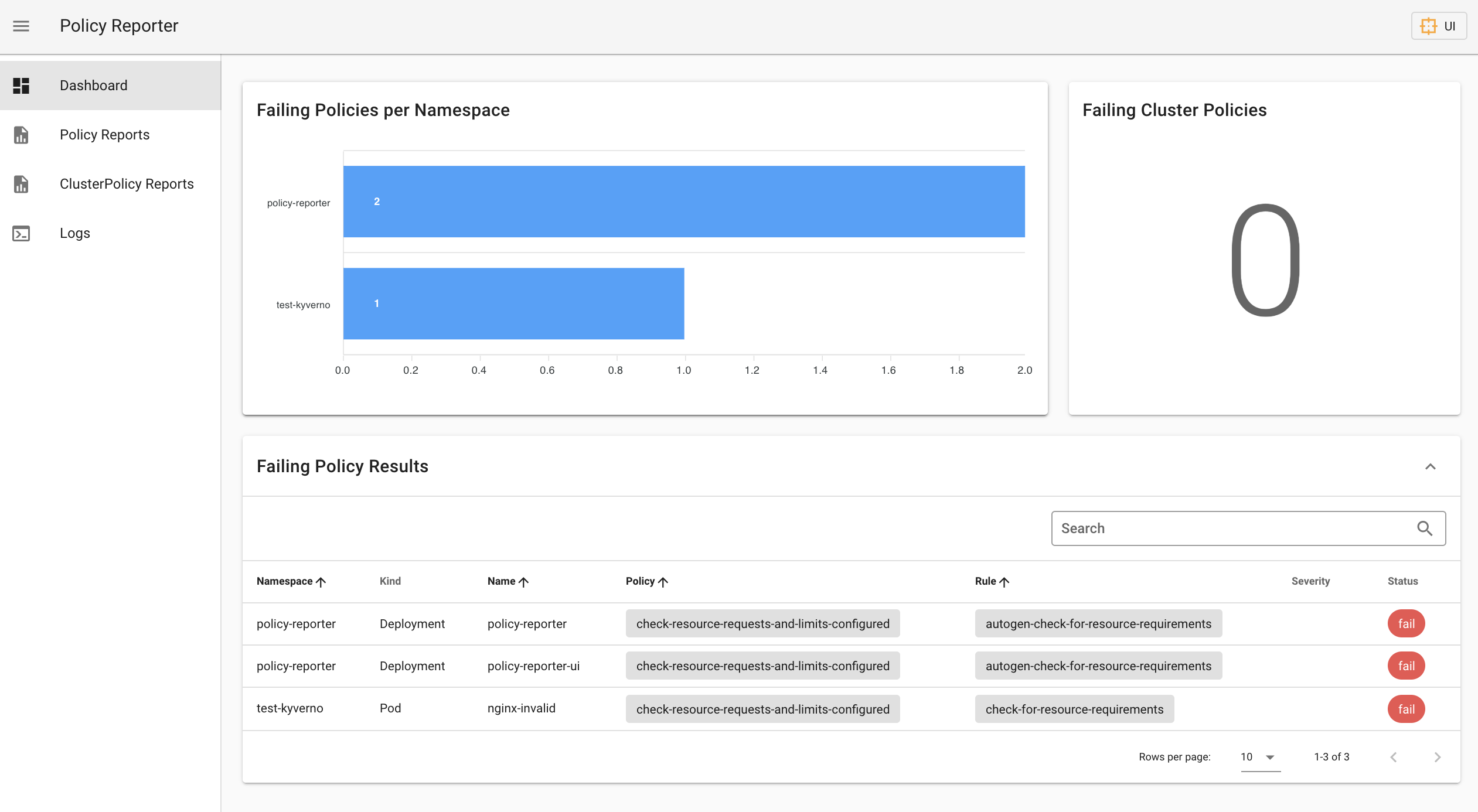

Open http://localhost:8082/ in your browser and you will see the dashboard with our failed nginx-invalid Pod as well as the failed policy-reporter and policy-reporter-ui Deployments. The dashboard displays all failed or erroneous validation results.

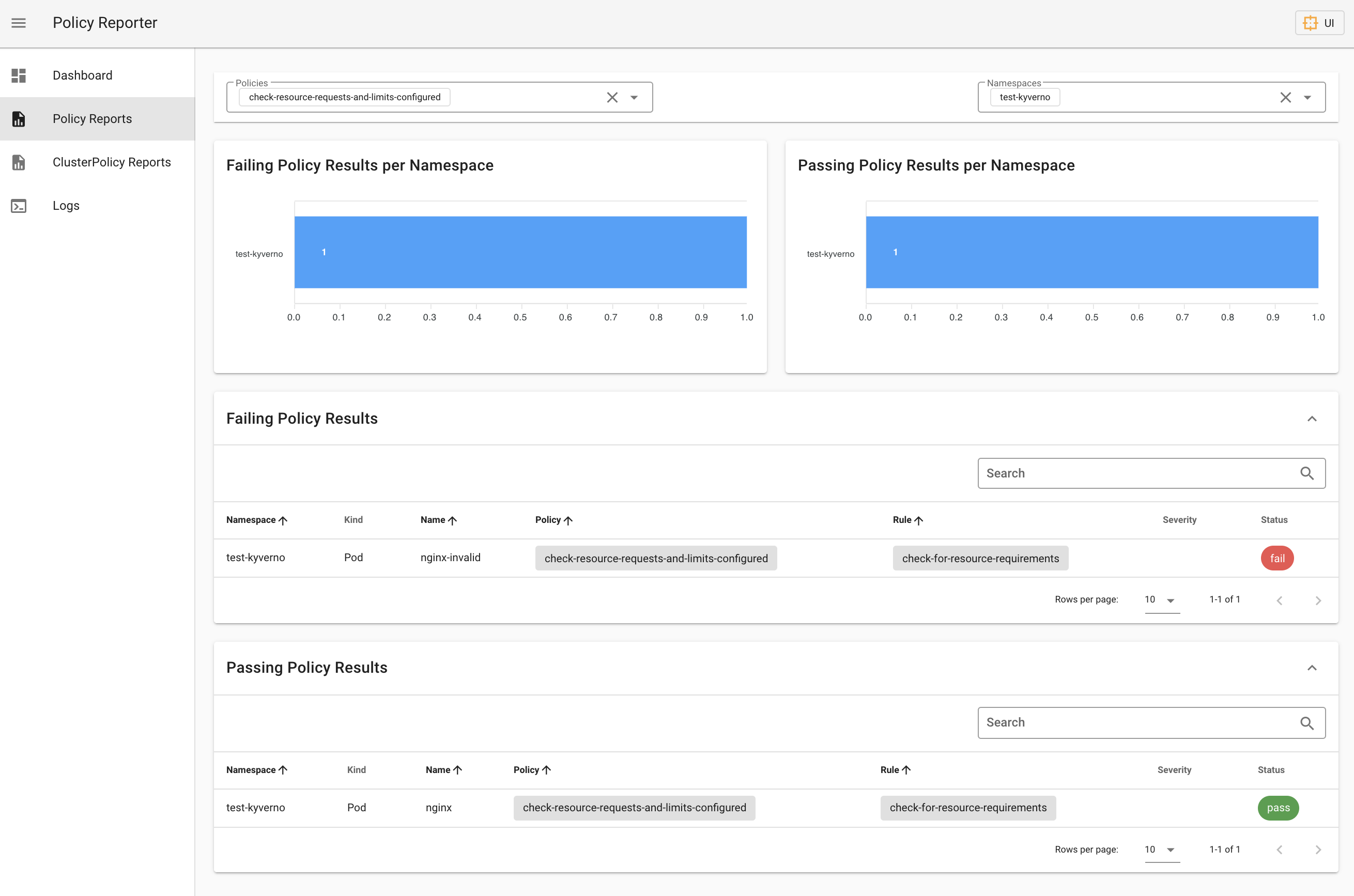

Under Policy Reports you can view all validation results for the selected set of policies with an optional namespace filter. This sample screen shows all the results of our custom ClusterPolicy for the namespace test-kyverno only.

ClusterPolicy Reports refers to the second CRD for validation results: a ClusterPolicyReport is generated when you validate Namespaces or other cluster-scoped resources.

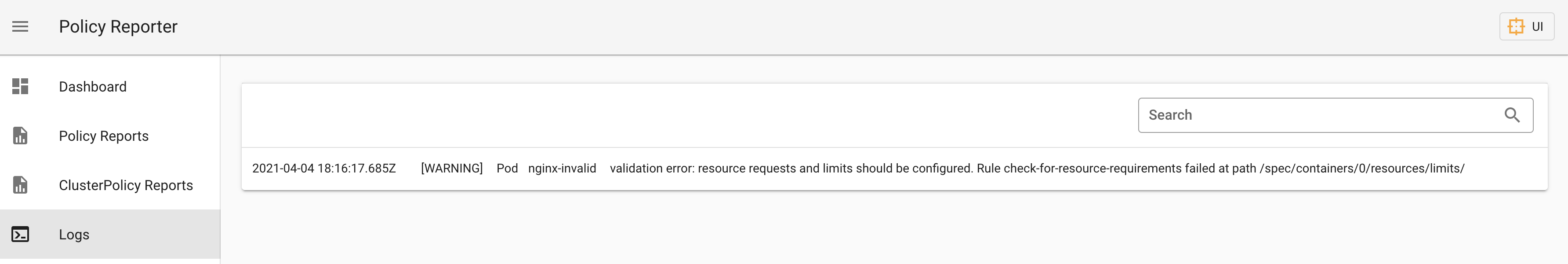

By default, Logs lists the last 200 failed validation results; this limit is customizable in the Helm chart. 'Warning' is the priority of your policy: it is the default for all policies and can be configured in Policy Reporter or by setting the 'Severity' in your Kyverno policy, which is available since Kyverno 1.3.5.
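A bounded log with a default priority can be sketched in Python with a deque whose maxlen drops the oldest entries automatically. The sketch assumes 200 as the default limit and 'warning' as the default priority, as described above; SEVERITY_TO_PRIORITY is a made-up mapping for illustration only, so check the Policy Reporter documentation for how severities are actually translated.

```python
from collections import deque

DEFAULT_PRIORITY = "warning"
# Hypothetical severity -> priority mapping, for illustration only
SEVERITY_TO_PRIORITY = {"low": "info", "medium": "warning", "high": "critical"}


class FailureLog:
    """Keep only the most recent failed results, newest first on read."""

    def __init__(self, max_items=200):
        # a deque with maxlen discards the oldest entry when it is full
        self._items = deque(maxlen=max_items)

    def add(self, result):
        severity = result.get("severity")
        priority = SEVERITY_TO_PRIORITY.get(severity, DEFAULT_PRIORITY)
        self._items.append({**result, "priority": priority})

    def latest(self):
        return list(reversed(self._items))


if __name__ == "__main__":
    log = FailureLog(max_items=2)
    log.add({"resource": "nginx"})
    log.add({"resource": "policy-reporter", "severity": "high"})
    log.add({"resource": "nginx-invalid"})
    for entry in log.latest():
        print(entry["resource"], entry["priority"])
```

With max_items=2, the first entry (nginx) has already been evicted when the third result arrives, so only the two newest failures are printed.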

Using Grafana

To use Grafana as a monitoring solution for more production-like use cases, you can enable the optional monitoring subchart.

If Prometheus and Grafana are not yet running in your cluster, you can install them locally with the kube-prometheus-stack Helm chart.

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo update

Installation with default values:

helm install monitoring prometheus-community/kube-prometheus-stack --namespace monitoring --create-namespace

After a few minutes all pods should be in a running state.

kubectl get pods -n monitoring

NAME                                                     READY   STATUS    RESTARTS   AGE
alertmanager-monitoring-kube-prometheus-alertmanager-0   2/2     Running   0          7m35s
monitoring-grafana-58bf4946c8-tt8zn                      2/2     Running   0          7m52s
monitoring-kube-prometheus-operator-5dc569645-v7z29      1/1     Running   0          7m52s
monitoring-kube-state-metrics-6bfb865c69-fvncg           1/1     Running   0          7m52s
monitoring-prometheus-node-exporter-fmdpt                1/1     Running   0          7m52s
prometheus-monitoring-kube-prometheus-prometheus-0       2/2     Running   1          7m32s

Access the installed Grafana with a port-forward, as with the Policy Reporter UI before:

kubectl port-forward service/monitoring-grafana 8080:80 -n monitoring

You can access Grafana with http://localhost:8080 and login with username admin and password prom-operator.

Now let us upgrade Policy Reporter with monitoring enabled and configured for our needs.

# values.yaml
# deploy the UI and monitoring together; both are independent of each other
ui:
  enabled: true

monitoring:
  enabled: true
  namespace: monitoring
  serviceMonitor:
    labels:
      release: monitoring

helm upgrade policy-reporter policy-reporter/policy-reporter -f values.yaml -n policy-reporter
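The release: monitoring label matters because, with its default values (serviceMonitorSelectorNilUsesHelmValues: true), kube-prometheus-stack configures Prometheus to select only ServiceMonitors labeled with the chart's release name, here monitoring. The matchLabels rule behind this selection is simple enough to sketch in Python; the function name and the extra app label below are hypothetical and only illustrate the matching logic.

```python
def selector_matches(match_labels, labels):
    """matchLabels semantics: every key/value pair of the selector must be
    present on the object; an empty selector matches everything."""
    return all(labels.get(key) == value for key, value in match_labels.items())


if __name__ == "__main__":
    # assumed default selector of the kube-prometheus-stack release "monitoring"
    prometheus_selector = {"release": "monitoring"}
    # ServiceMonitor labels with and without the label from values.yaml above
    with_label = {"app": "policy-reporter", "release": "monitoring"}
    without_label = {"app": "policy-reporter"}
    print(selector_matches(prometheus_selector, with_label))     # True
    print(selector_matches(prometheus_selector, without_label))  # False
```

If the label is missing, the ServiceMonitor still exists, but Prometheus silently ignores it and the dashboards stay empty.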

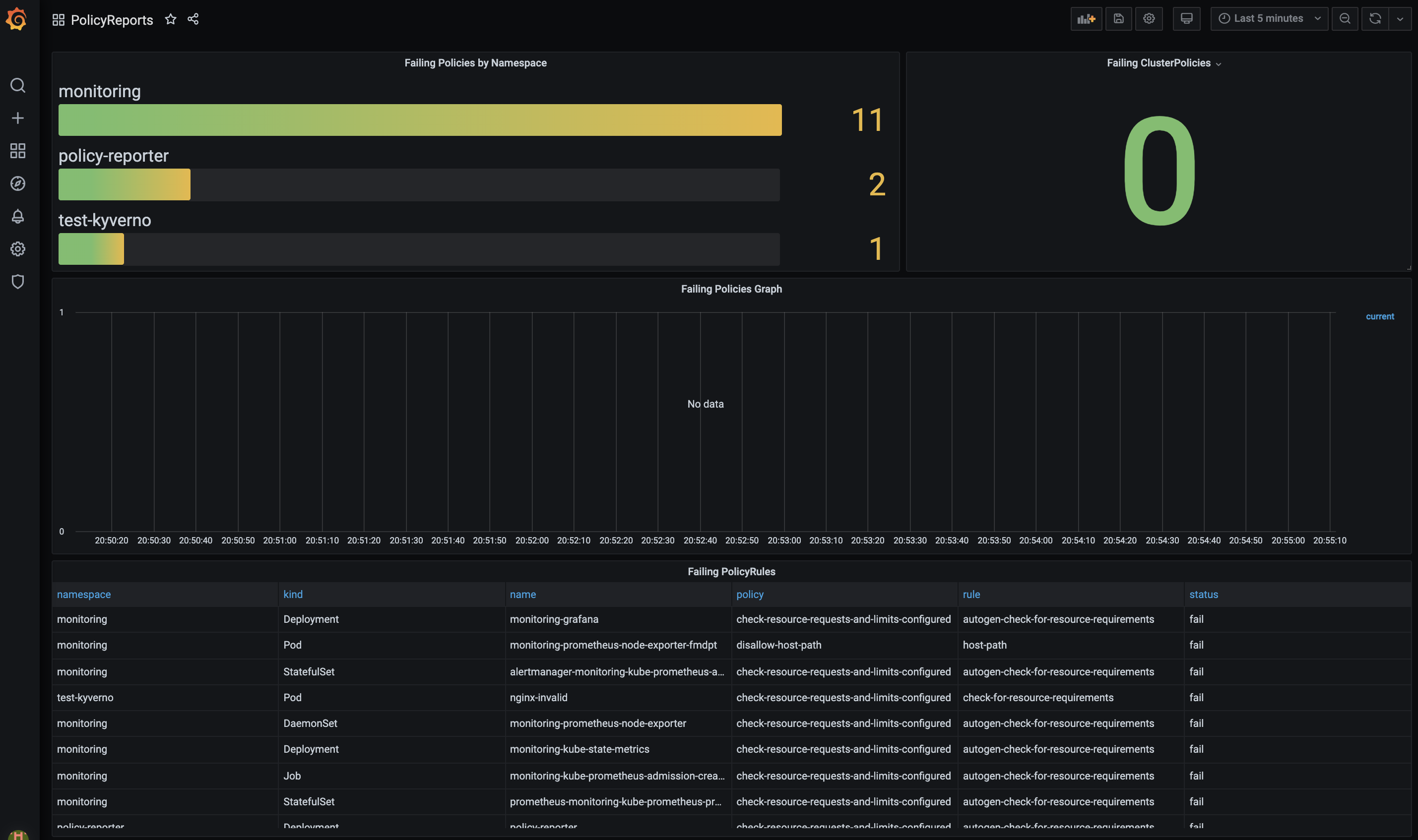

After a refresh, you should see three new dashboards labeled Policy Reporter.

It can take a few minutes until the new metrics are scraped and the results show up in the dashboards. After 2 minutes, I got the following information from the Policy Reporter dashboard.

Conclusion

Kyverno is a simple yet powerful tool. Its included PSP-like policies already provide a significant security improvement. With its YAML-based policy engine, it offers a quick start for users with some Kubernetes experience. We were able to define common best practices as policies with little effort and to set up monitoring with familiar tools using Policy Reporter. We started with audit policies to see how the policies would affect our cluster and to spare our developers additional effort at first. We can always move policies from audit to enforce mode to further improve security. Beyond validation, Kyverno offers other helpful features such as resource mutation and generation, but these will be the subject of an upcoming article.